If you want to keep your pages in the SERP, you have to make sure they're indexed often.

So with thousands of pages, how do you make sure bots visit the most important ones on your site, especially given that they’ll spend only a limited amount of time crawling it?

Solution: crawl budget optimization.

In this post, I will focus on improving your internal links and pagination as a way to ensure bots index the pages you've deemed most important.


Why Is a Crawl Budget Important For SEO?

As Mitul put it in an earlier post about available crawl budget:

“Crawl budget isn’t something we SEOs think about very often. And, if you aren't familiar with the term 'crawl budget', it doesn't mean money available.”

While crawl budget might not directly influence your metrics or results, wasting it can seriously hurt your pages’ visibility in SERPs and, in turn, undermine your other efforts.

Recommended Reading: 5 Strategies to Make the Most of Your Available Crawl Budget

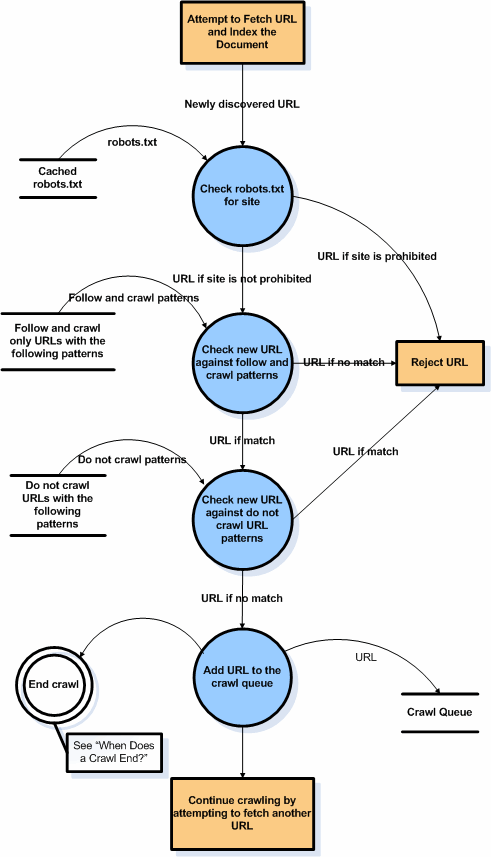

To demonstrate this, here’s the process Googlebot goes through when crawling a site, according to Google:

Not only is this a complex process, but there is also a striking number of factors that can deter bots from indexing a particular URL. In the graph, Google refers to them as crawl patterns, factors that:

“[…] prohibit the crawler from following and indexing particular URLs.” (Source)

We can easily assume that the search engine refers to a deliberate action by a webmaster that blocks a bot from accessing the site. This typically happens in a robots.txt file. But there are other factors that can affect the crawl as well.
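To see how a robots.txt rule stops a crawler, here is a minimal sketch using Python's standard-library `urllib.robotparser`. The rules and example.com URLs are hypothetical, and the rules are parsed from a string rather than fetched from a live site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for www.example.com.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # load rules from text instead of fetching the file

def is_crawlable(url: str, user_agent: str = "Googlebot") -> bool:
    """True if the rules above allow user_agent to fetch url."""
    return parser.can_fetch(user_agent, url)

for url in [
    "https://www.example.com/products/shoes",
    "https://www.example.com/cart/checkout",
    "https://www.example.com/search?q=shoes",
]:
    print(url, "->", "allowed" if is_crawlable(url) else "blocked")
```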

Popularity is one of those factors. According to many SEOs, popular domains get crawled more often.

Freshness is another factor, and it ties in with what I said about popularity. Google always wants to include the most current information about a page. This means that the more often you update your content, the more likely it is to get crawled.

Freshness and popularity aside, one thing is clear: poor site structure (from incorrect pagination and interlinking) may prevent search engines from crawling and indexing your site.

Another issue to consider? Duplicate content.

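Duplicate content also eats into your crawl budget: every extra URL that serves the same content is one more page a bot has to fetch instead of a page you actually want crawled. Time and time again, we've mentioned how duplicate content can kill your search performance.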
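A rough way to spot exact duplicates in crawl data is to fingerprint each page's normalized content and group the URLs that share a fingerprint. This is a minimal sketch, assuming you already have each URL's HTML; the helper names and sample pages are hypothetical, the regex tag-stripping is deliberately crude, and near-duplicates (pages that differ by a sentence or two) would need a fuzzier comparison than an exact hash.

```python
import hashlib
import re
from collections import defaultdict

def content_fingerprint(html_text: str) -> str:
    """Hash a page's text after crude normalization."""
    text = re.sub(r"<[^>]+>", " ", html_text)         # drop tags (rough, not a real HTML parser)
    text = re.sub(r"\s+", " ", text).strip().lower()  # collapse whitespace, ignore case
    return hashlib.sha256(text.encode("utf-8")).hexdigest()

def find_exact_duplicates(pages: dict[str, str]) -> list[list[str]]:
    """Group URLs whose normalized content is identical."""
    groups = defaultdict(list)
    for url, html_text in pages.items():
        groups[content_fingerprint(html_text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]

# Hypothetical crawl results: one product page reachable under three URLs.
pages = {
    "https://www.example.com/shoes/runner": "<h1>Runner</h1><p>Lightweight shoe.</p>",
    "https://www.example.com/shoes/runner?color=red": "<h1>Runner</h1>\n<p>Lightweight  shoe.</p>",
    "https://www.example.com/sale/runner": "<H1>Runner</H1><p>Lightweight shoe.</p>",
    "https://www.example.com/shoes/trail": "<h1>Trail</h1><p>Grippy shoe.</p>",
}
print(find_exact_duplicates(pages))
```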

Recommended Reading: Crawlability Issues and How to Find Them

Let’s look at how fixing those issues through crawl budget optimization will help boost your visibility.

Ways to Optimize Your Crawl Budget With Internal Links

Fact: Internal links boost the user experience.

They point web visitors to additional content they might find useful. In the process, internal links inform Google of other relevant pages on your site and even, through their anchor text, the keywords for which you’d like those pages to rank.

In fact, there are multiple benefits to internal linking.

But internal links also help with indexing and crawl budget. Here’s how:

#1. Analyze Internal Links To Remove Crawler Roadblocks

It goes without saying that broken links and long redirect chains will stop bots in their tracks. But what you may not realize is that sending bots to chase URLs that simply do not exist also wastes precious crawl budget.
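To make redirect chains and dead ends concrete, here is a minimal sketch that follows each internal link's redirects and reports what a crawler would run into. It assumes a hypothetical, hard-coded table of server responses (`RESPONSES`) in place of live HTTP requests; a real audit would request each linked URL without auto-following redirects and record the status code and `Location` header at every hop.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Response:
    status: int
    location: Optional[str] = None  # redirect target for 3xx responses

# Hypothetical crawl data: internal URL -> what the server returned.
RESPONSES = {
    "/old-shoes": Response(301, "/shoes-2019"),
    "/shoes-2019": Response(301, "/shoes"),
    "/shoes": Response(200),
    "/discontinued": Response(404),
    "/promo": Response(302, "/discontinued"),
    "/loop-a": Response(301, "/loop-b"),
    "/loop-b": Response(301, "/loop-a"),
}
REDIRECT_CODES = {301, 302, 307, 308}

def trace(url: str, max_hops: int = 10) -> tuple[list[str], int]:
    """Follow redirects from url. Returns (URLs visited, final status); -1 means loop or too many hops."""
    path = [url]
    while True:
        resp = RESPONSES.get(path[-1], Response(404))  # unknown URLs are treated as 404s
        if resp.status not in REDIRECT_CODES or not resp.location:
            return path, resp.status
        if resp.location in path or len(path) - 1 >= max_hops:
            return path, -1
        path.append(resp.location)

def audit_internal_link(url: str) -> str:
    """Describe what a crawler would hit when following a link to url."""
    path, status = trace(url)
    hops = len(path) - 1
    if status == -1:
        return f"{url}: redirect loop or overlong chain ({' -> '.join(path)})"
    if status >= 400:
        return f"{url}: broken ({status}) after {hops} redirect(s)"
    if hops:
        return f"{url}: {hops} redirect(s), link directly to {path[-1]} instead"
    return f"{url}: OK"

for link in RESPONSES:
    print(audit_internal_link(link))
```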

Recommended Reading: How to Increase Crawl Efficiency

Let me put the severity of this issue into perspective.

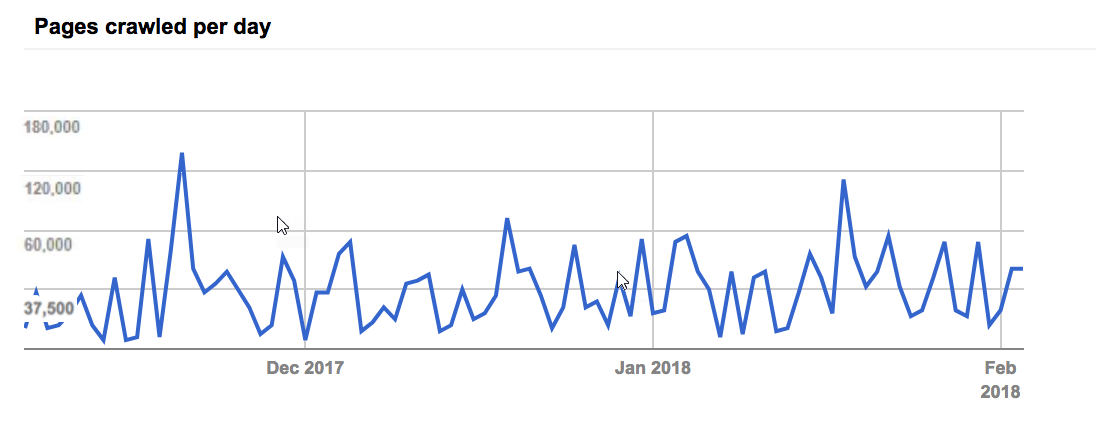

Imagine that your site has 100,000 URLs. Now, say Google Search Console shows that Google typically crawls 1,000 URLs a day (some of them, like the homepage, more often than others).

A simple calculation reveals that it would take Google over three months (at least 100,000 / 1,000 = 100 days) to crawl all of that content just once.

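The arithmetic behind that estimate, plus what happens when part of each day's crawl is spent on broken links and redirects, can be sketched as follows. The 20% wasted share is a hypothetical figure, and the function assumes every crawl hits a distinct URL, which is optimistic because pages like the homepage get recrawled.

```python
def days_to_crawl_once(total_urls: int, crawls_per_day: int, wasted_share: float = 0.0) -> float:
    """Days to crawl every URL once when wasted_share of daily crawls goes to dead ends."""
    useful_crawls_per_day = crawls_per_day * (1 - wasted_share)
    return total_urls / useful_crawls_per_day

print(days_to_crawl_once(100_000, 1_000))       # about 100 days
print(days_to_crawl_once(100_000, 1_000, 0.2))  # about 125 days if 20% of crawls are wasted
```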

Now, as we’ve said before, you can optimize your site to make the most of this crawl budget. This typically involves blocking certain content from being crawled, but also removing broken links and redirects that send crawlers to non-existent URLs.

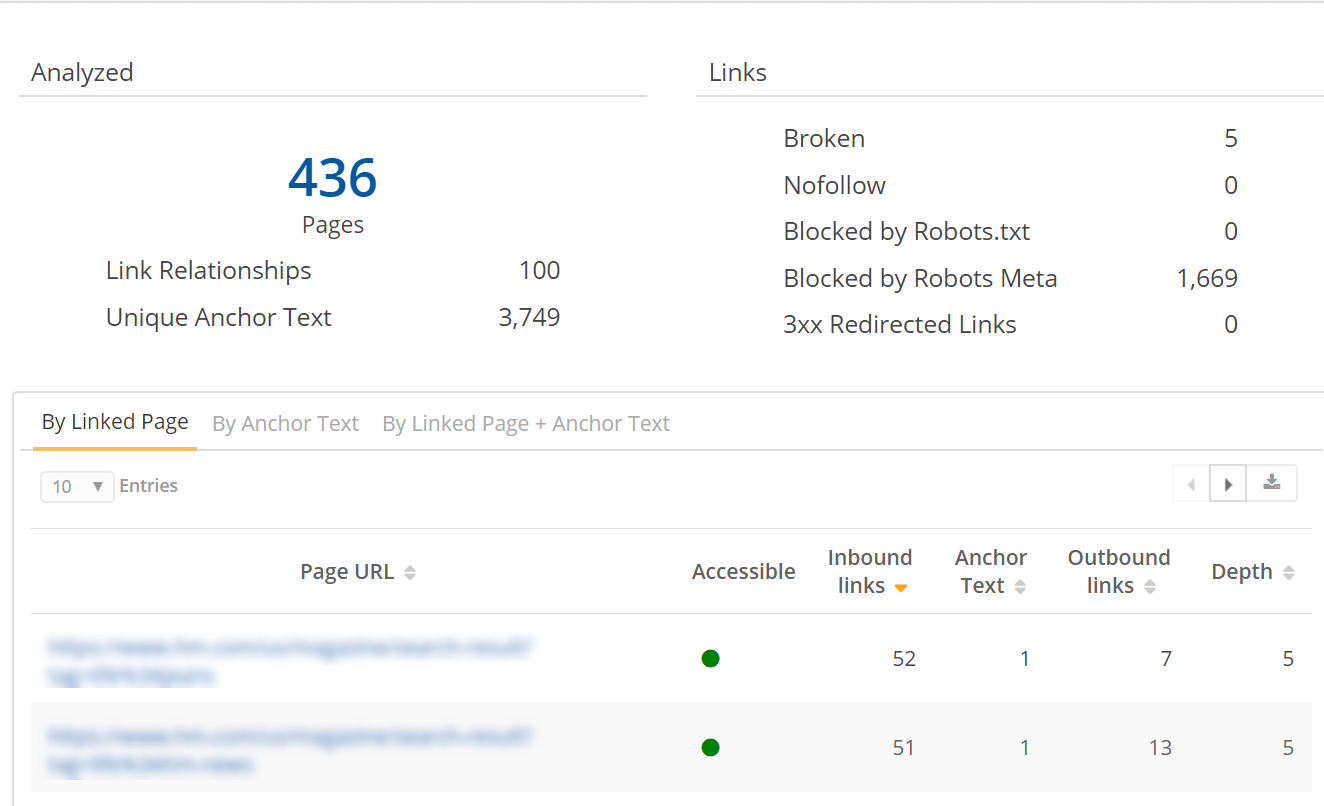

Even a simple internal links audit could help you identify and fix issues with URLs, such as hyperlinks including relative URLs that might cause crawling and indexation issues.

In turn, prevent bots from wasting time on pages they shouldn’t concern themselves with.

(seoClarity’s Clarity Audits report showing issues with relative URLs on a site.)
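Relative links resolve against the URL of the page they appear on, so a link like `sale/` on a blog post points somewhere different than the same link on the homepage, and can easily send bots to URLs that do not exist. Here is a minimal sketch for flagging them with Python's standard-library `html.parser` and `urllib.parse.urljoin`; the sample HTML and page URL are hypothetical, and any `href` without a scheme (other than in-page `#` anchors) is treated as relative.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

class LinkCollector(HTMLParser):
    """Collect href values from <a> tags."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)

def relative_links(page_url: str, html_text: str) -> list[tuple[str, str]]:
    """Return (href as written, absolute URL it resolves to) for each relative link."""
    collector = LinkCollector()
    collector.feed(html_text)
    found = []
    for href in collector.hrefs:
        if not urlparse(href).scheme and not href.startswith("#"):
            found.append((href, urljoin(page_url, href)))
    return found

html_text = """
<a href="https://www.example.com/shoes/">Shoes</a>
<a href="sale/">Sale</a>
<a href="../about">About</a>
<a href="#reviews">Reviews</a>
"""
for href, resolved in relative_links("https://www.example.com/blog/post/", html_text):
    print(f"{href!r} resolves to {resolved}")
```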

#2. Use Internal Links to Point Bots to Key Pages Faster

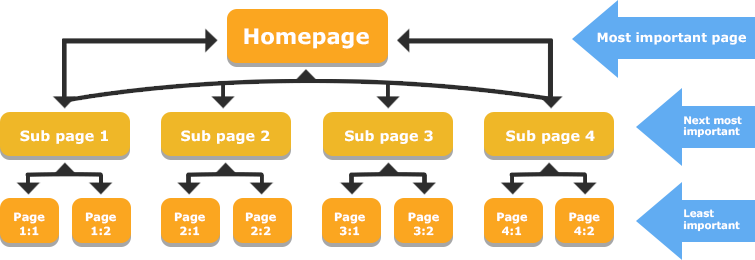

Take a look at a typical, hierarchical site structure. It’s one of the most popular ways to organize data on a website.

A quick glance reveals that a bot accessing the site will most likely visit the homepage and the next level of content. But whether it visits anything after that is really down to pure chance, isn’t it?

Well, that or a useful interlinking strategy.

That’s because you can help bots reach crucial pages located deeper in your site’s structure by linking to them from your most authoritative content.

Bots travel through a site by following its links. So by linking to key pages or category-level assets, you can point bots to them much faster and get them crawled sooner, even on a limited crawl budget.
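One way to measure how quickly bots can reach a page is click depth: the fewest links a crawler must follow from the homepage to get there. The sketch below computes it with a breadth-first search over a hypothetical internal link graph, then adds a single link from a popular blog post to show a deep product page moving one level closer to the homepage.

```python
from collections import deque

def click_depth(links: dict[str, list[str]], start: str = "/") -> dict[str, int]:
    """Breadth-first search from the homepage: fewest clicks needed to reach each page."""
    depth = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first time reached = shortest path in BFS
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth

# Hypothetical internal link graph: page -> pages it links to.
site = {
    "/": ["/shoes", "/blog"],
    "/shoes": ["/shoes/running"],
    "/shoes/running": ["/shoes/running/trail"],
    "/shoes/running/trail": ["/shoes/running/trail/model-x"],
    "/blog": ["/blog/best-trail-shoes"],
}
print(click_depth(site)["/shoes/running/trail/model-x"])  # 4

# Add one contextual link from the (hypothetically popular) blog post.
site["/blog/best-trail-shoes"] = ["/shoes/running/trail/model-x"]
print(click_depth(site)["/shoes/running/trail/model-x"])  # 3
```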

Of course, creating and implementing deep links on large sites typically requires a substantial amount of time and the assistance of a dev team. That's why we created Link Optimizer, which automatically optimizes internal links for thousands of pages to increase discoverability.

#3. Identify Pages with Too Many Internal Links

Now, there are no set rules for the number of internal links you should or shouldn't have on a page.

Content with three internal links could easily rank as well as content with hundreds.

(Although, as a general rule, you shouldn’t have more than 100 internal links per page. It’s certainly one of the issues we verify in our site audit capability, Clarity Audits.)

That covers rankings and search visibility. When it comes to crawling, the issue is quite different.

For one, too many links could distract a bot, sending it in all directions instead of toward the sections of the site you’d like it to index faster. With our built-in crawler and site audit technology, you can quickly identify pages with too many internal links that could point the search engine's crawl in the wrong direction.
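To find pages that may be scattering the crawl, you can count the internal links on each page and flag those above a threshold. This minimal sketch uses the 100-link rule of thumb mentioned above as the cutoff; the helper names and sample pages are hypothetical, links are counted as written (a URL linked twice counts twice), and "internal" here simply means the same hostname as the page.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

MAX_INTERNAL_LINKS = 100  # rule of thumb from the text, not an official Google limit

class AnchorCounter(HTMLParser):
    """Count <a href> links that resolve to the same host as the page."""

    def __init__(self, page_url: str):
        super().__init__()
        self.page_url = page_url
        self.host = urlparse(page_url).netloc
        self.internal = 0

    def handle_starttag(self, tag, attrs):
        href = dict(attrs).get("href") if tag == "a" else None
        if not href or href.startswith("#"):
            return
        parsed = urlparse(urljoin(self.page_url, href))  # resolve relative links first
        if parsed.scheme in ("http", "https") and parsed.netloc == self.host:
            self.internal += 1

def count_internal_links(page_url: str, html_text: str) -> int:
    counter = AnchorCounter(page_url)
    counter.feed(html_text)
    return counter.internal

def pages_over_limit(pages: dict[str, str], limit: int = MAX_INTERNAL_LINKS) -> dict[str, int]:
    """Map each page URL with more than `limit` internal links to its link count."""
    counts = {url: count_internal_links(url, html) for url, html in pages.items()}
    return {url: n for url, n in counts.items() if n > limit}

# Hypothetical pages: a mega-menu page with 150 internal links and a lean article.
mega_menu = "".join(f'<a href="/category/{i}">Cat {i}</a>' for i in range(150))
article = '<a href="/shoes">Shoes</a><a href="https://other-site.com/">External</a>'
print(pages_over_limit({
    "https://www.example.com/": mega_menu,
    "https://www.example.com/blog/post": article,
}))
```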

#4. Use Interlinking to Support Site Structure and Ensure Bots Reach Authoritative Pages

This goes without saying, doesn’t it? The most popular content on your site has significantly more links than the rest. And so, linking to low-performing pages from that highly authoritative content will pass at least some of its credibility on to them.

In turn, it will also suggest those pages to Googlebot for crawling and indexing.

Simple, right? Unfortunately, there’s a catch:

With thousands of pages, identifying and linking to a handful of weak pages you’d like search engines to index is next to impossible.

Instead, you need to distribute internal links in a way that reaches pages deeper in the architecture.

Target top-level content such as category pages, top product pages and so on. Internal links from the homepage and other authoritative content will strengthen them, and in turn, support the overall site structure.
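To build intuition for how authority flows through internal links, the sketch below runs the classic PageRank algorithm (by power iteration) over a small, hypothetical site graph, then adds one link from a popular guide to a page with no inbound links. This is a simplified model for illustration only; Google does not publish how it weighs internal links today, so treat the scores as relative, not as real ranking values.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85, iterations: int = 50) -> dict[str, float]:
    """Classic PageRank computed by power iteration over an internal link graph."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1 / n for p in pages}
    for _ in range(iterations):
        new_rank = {p: (1 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # Each outgoing link passes an equal share of this page's score.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its score across all pages.
                for p in pages:
                    new_rank[p] += damping * rank[page] / n
        rank = new_rank
    return rank

# Hypothetical site: "/weak-page" exists, but nothing links to it yet.
site = {
    "/": ["/category", "/popular-guide"],
    "/category": ["/", "/product-a"],
    "/popular-guide": ["/", "/category"],
    "/product-a": ["/category"],
    "/weak-page": ["/"],
}
before = pagerank(site)["/weak-page"]

# Same site, plus one contextual link from the popular guide to the weak page.
with_link = {page: list(targets) for page, targets in site.items()}
with_link["/popular-guide"].append("/weak-page")
after = pagerank(with_link)["/weak-page"]

print(f"/weak-page score: {before:.3f} without the link, {after:.3f} with it")
```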

To apply this at scale, we offer Internal Link Analysis to find the number of pages that do not have internal links, optimize anchor text, locate broken internal links, and more.
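Finding pages that have no internal links pointing to them comes down to a set difference: every URL you want indexed, minus every URL that some other page links to. Here is a minimal sketch, assuming you have a list of known URLs (for example, exported from your CMS) and a crawled link graph; both inputs and the `find_orphans` helper are hypothetical, and self-links are ignored because they don't help a bot discover the page.

```python
def find_orphans(known_urls: set[str], links: dict[str, list[str]], homepage: str = "/") -> set[str]:
    """Known pages that no other page links to (the homepage is excluded on purpose)."""
    linked_to = {
        target
        for source, targets in links.items()
        for target in targets
        if target != source  # a page linking to itself doesn't make it discoverable
    }
    return known_urls - linked_to - {homepage}

# Hypothetical inputs: URLs from a CMS export, and the crawled internal link graph.
known_urls = {"/", "/shoes", "/shoes/trail", "/spring-sale", "/old-landing"}
links = {
    "/": ["/shoes"],
    "/shoes": ["/shoes/trail", "/"],
    "/spring-sale": ["/spring-sale", "/shoes"],  # only links to itself and out
}
print(sorted(find_orphans(known_urls, links)))  # ['/old-landing', '/spring-sale']
```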

Recommended Reading: Internal Links Workflow: How to Optimize and Gain More Site Authority

Closing Thoughts

Crawl budget, while important, isn't a major worry for most SEOs. That said, when crawl issues arise, your rankings and search visibility take a hit.

Luckily, you can make better use of your available crawl budget by analyzing your internal link structure, fixing roadblocks, and pointing Googlebot to the sections of the site you’d like indexed more regularly.
