Googlebot, Google's web crawler, constantly crawls and indexes web pages, collecting valuable data about your site’s performance. But did you know you can also gain insights by tracking Googlebot’s activity on your site?

Understanding what Googlebot sees allows you to align your SEO strategy more closely with Google’s guidelines. By analyzing bot activity, you not only get a behind-the-scenes look at how your website is perceived by the search engine but can also identify spam bots that might affect your site.

Many technical SEOs overlook log file analysis, missing out on crucial insights that go beyond what a standard site crawl reveals. In this post, I’ll guide you through the process of analyzing server log files to unlock these insights and boost your search performance.

Table of Contents:

- What is Log File Analysis?

- Challenges of Log File Analysis

- How to Analyze Log Files With seoClarity

- Key SEO Insights From Log File Analysis

What is Log File Analysis?

Log file analysis involves examining the server log files, which are records of all requests made to your web server, often referred to as "hits."

This process provides valuable insights into which pages and content on your site Google is crawling, making it an essential tool for understanding how search engines interact with your website.

The information contained in a log file includes:

- Time & Date

- The request IP address

- Response Code

- Referrer

- User Agent

- Requested File

Below is an example of what a server log file looks like (using dummy information):

127.0.0.1 user-identifier frank [10/Oct/2000:13:55:36 -0700] "GET /apache_pb.gif HTTP/1.0" 200 2326
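To make the fields in that line concrete, here is a minimal Python sketch that splits a log entry into named parts. It assumes the server writes the Common Log Format (optionally followed by the referrer and user agent fields of the Combined Log Format), and that the request path in the sample is `/apache_pb.gif`. The names `LOG_PATTERN` and `parse_log_line` are illustrative, not part of any tool mentioned in this post, and your own server's format may differ.

```python
import re
from datetime import datetime

# Common Log Format, plus the optional referrer and user-agent fields
# that the Combined Log Format appends. Assumed layout; adjust to your server.
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) (?P<ident>\S+) (?P<user>\S+) '
    r'\[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<size>\d+|-)'
    r'(?: "(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)")?'
)

def parse_log_line(line):
    """Return a dict of fields for one log line, or None if it does not match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    entry = match.groupdict()
    entry["time"] = datetime.strptime(entry["time"], "%d/%b/%Y:%H:%M:%S %z")
    entry["status"] = int(entry["status"])
    # A size of "-" means no response body was sent.
    entry["size"] = 0 if entry["size"] == "-" else int(entry["size"])
    return entry

sample = ('127.0.0.1 user-identifier frank [10/Oct/2000:13:55:36 -0700] '
          '"GET /apache_pb.gif HTTP/1.0" 200 2326')
entry = parse_log_line(sample)
print(entry["ip"], entry["path"], entry["status"], entry["size"])
# prints: 127.0.0.1 /apache_pb.gif 200 2326
```

Because the sample line has no referrer or user agent, those two keys come back as `None`; a Combined Log Format line would fill them in.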

Because a server log file records real requests from Googlebot (and other search engine crawlers), analyzing it answers questions like:

- Is my crawl budget spent efficiently?

- What accessibility errors were met during the crawl?

- Where are the areas of crawl deficiency?

- Which are my most active pages?

- Which pages does Google not know about?

These are just a few examples of the insights that you can uncover with log file analysis.

While there are ways to signal to Google how it should crawl a site (e.g. an XML sitemap, robots.txt, etc.), finding the answers to these questions can be greatly beneficial in adjusting your strategy to alert Googlebot to your most important pages.

Note: When Googlebot crawls your site, it now renders JavaScript in addition to parsing HTML.

Challenges of Log File Analysis

Log file analysis comes with its share of challenges. One of the biggest hurdles is accessing bot log files, especially for enterprise-level companies with hundreds of thousands of pages. The sheer volume of data can be overwhelming.

Another challenge is that log file analysis is typically separate from your SEO reporting, meaning you have to manually connect the dots. While it’s possible to do this, it’s far from efficient. Manually analyzing such massive data in Excel limits you to viewing a single day's log file data, making it difficult to spot trends. Plus, the time spent filtering, segmenting, and organizing the data can be substantial.

To make log file analysis truly meaningful, you need a platform that aggregates this data, allowing you to see the bigger picture.

Consider this example: If a website has 5,000 daily visitors, each viewing 10 pages, the server generates 50,000 log file entries every day. Manually sifting through that data is not only cumbersome but also impractical.
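To give a sense of what scripted aggregation looks like at that volume, here is a rough Python sketch that counts hits per day across any number of log lines, so a trend shows up instead of a single day's snapshot. It assumes each line carries a bracketed timestamp like `[10/Oct/2000:13:55:36 -0700]` and that matching on the text `Googlebot` is good enough for a first pass (it does not verify the bot is genuine). The function name `daily_hits` and the sample lines are made up for illustration.

```python
from collections import Counter
from datetime import datetime

def daily_hits(lines, agent_token="Googlebot"):
    """Count log lines per calendar day whose text contains agent_token."""
    counts = Counter()
    for line in lines:
        if agent_token not in line:
            continue
        start, end = line.find("["), line.find("]")
        if start == -1 or end < start:
            continue  # no bracketed timestamp on this line
        try:
            stamp = datetime.strptime(line[start + 1:end], "%d/%b/%Y:%H:%M:%S %z")
        except ValueError:
            continue  # skip lines whose timestamp is malformed
        counts[stamp.date().isoformat()] += 1
    return dict(sorted(counts.items()))

# Dummy lines; in practice you would iterate over open log files instead.
lines = [
    '66.249.66.1 - - [10/Oct/2000:13:55:36 -0700] "GET / HTTP/1.1" 200 512 "-" "Googlebot/2.1"',
    '66.249.66.1 - - [11/Oct/2000:01:02:03 -0700] "GET /a HTTP/1.1" 200 512 "-" "Googlebot/2.1"',
    '198.51.100.7 - - [11/Oct/2000:01:02:04 -0700] "GET /a HTTP/1.1" 200 512 "-" "Mozilla/5.0"',
    '66.249.66.1 - - [11/Oct/2000:09:00:00 -0700] "GET /b HTTP/1.1" 404 0 "-" "Googlebot/2.1"',
]
print(daily_hits(lines))
# prints: {'2000-10-10': 1, '2000-10-11': 2}
```

Reading line by line keeps memory use flat no matter how large the files get, which is the main reason to script this rather than open the files in a spreadsheet.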

By integrating your bot log files into the same tool as your SEO reporting, you can seamlessly connect the dots and derive actionable insights. So, what does this process look like?

How to Analyze Log Files With seoClarity

Integrating your bot log files with your SEO reporting allows you to easily connect the dots, and seoClarity is the only platform that offers a powerful log file analysis solution as part of its core offering.

At seoClarity, your Client Success Manager helps you set up all the appropriate files – essentially, you put the files in, and we go in to pull them. When the files are uploaded into the platform, you can use Bot Clarity, our integrated log-file analyzer, to discover how bots access your site, any issues they may encounter, and how your crawl budget is spent.

We do the heavy lifting so you’re left with the meaningful information.

Bot Clarity

Bot Clarity, our log file analyzer, allows you to correlate bot activity with your site’s rankings and analytics. To uncover these valuable insights, simply navigate to Bot Clarity within the Usability tab of the seoClarity platform.

(Bot Clarity within the seoClarity platform.)

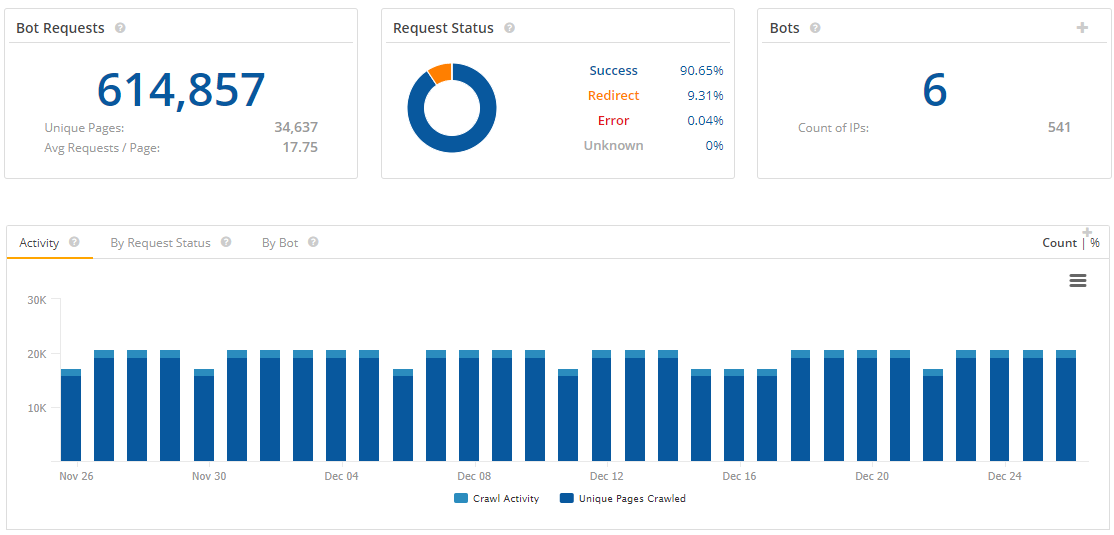

Here, you can discover bot requests, request status, and the number of bots found crawling your site.

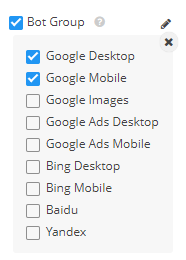

Since we’re mainly concerned with Googlebot, let’s filter down the results by Bot Group to get a deeper understanding of how it crawls and understands the site.

It’s also interesting to see how the different arms of Google (e.g. Google Desktop vs. Google Mobile) are crawling and understanding your site.

(Filter down to see information for specific bots.)

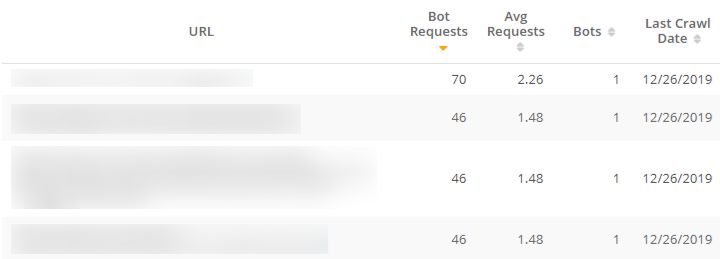

Next, we analyze which URLs Googlebot is crawling, and how often. Now that we know what pages of the site Google is looking at, we can download that data to have on hand. Then, look up your XML sitemap.

Are the pages in your XML sitemap the same pages that Google is crawling? Which pages is Google crawling that aren’t in the sitemap, where it may be wasting its time?

(Figure out which pages Googlebot is crawling, and how often.)
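If you have downloaded the crawled URLs and have your sitemap on hand, the comparison itself is a set difference. The sketch below assumes a standard sitemaps.org `<urlset>` file (not a sitemap index) and that the crawled URLs are full absolute URLs in the same form as the sitemap entries. The function names and example.com URLs are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace used by standard XML sitemaps.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

def sitemap_urls(sitemap_xml):
    """Extract every <loc> URL from an XML sitemap string."""
    root = ET.fromstring(sitemap_xml)
    return {
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    }

def compare_crawl_to_sitemap(crawled, listed):
    """Report URLs that appear in only one of the two collections."""
    crawled, listed = set(crawled), set(listed)
    return {
        "crawled_not_in_sitemap": sorted(crawled - listed),
        "in_sitemap_not_crawled": sorted(listed - crawled),
    }

sitemap = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/products</loc></url>
</urlset>"""
crawled = ["https://example.com/", "https://example.com/cart?session=123"]

report = compare_crawl_to_sitemap(crawled, sitemap_urls(sitemap))
print(report["crawled_not_in_sitemap"])  # ['https://example.com/cart?session=123']
print(report["in_sitemap_not_crawled"])  # ['https://example.com/products']
```

The first list points at possible crawl waste; the second points at pages you care about that Googlebot has not requested in the period covered by the logs.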

Log file analysis goes beyond simply revealing which pages Googlebot is crawling. Let’s explore some additional use cases and the valuable insights they can provide…

Key SEO Insights From Log File Analysis

Log data can be used across a variety of use cases.

Analyzing bot log files lets you see your site the way the search engine sees it. This means you can pick up on potential errors and fix them with site updates before the bots come around again.

Spoofed Bot Activity

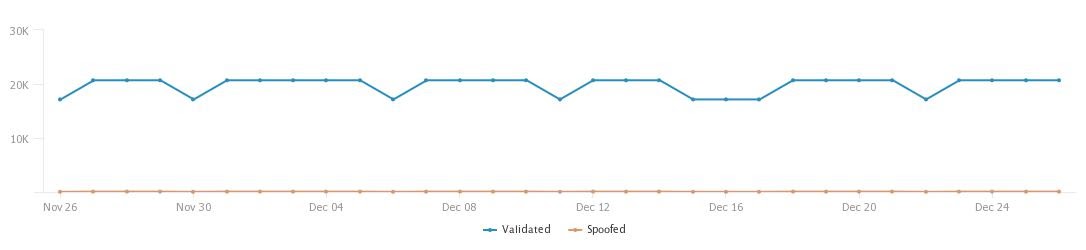

Spoofed Activity refers to any crawl requests from a bot that declares itself as a major search engine but whose IP doesn't match that of the search engine.

Bot Clarity easily flags crawlers that pretend to be Googlebot and use up valuable resources crawling your site. If you do find spam bots, cleaning them up will help optimize your crawl budget and speed up your site's loading time.

(Validated vs. spoofed activity in Bot Clarity.)

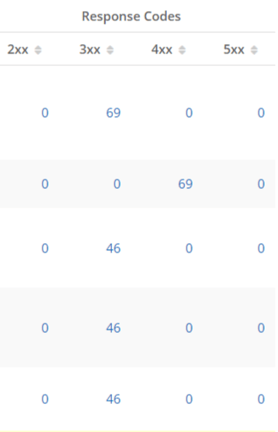
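For readers curious how this kind of check can work in principle, Google documents a reverse-then-forward DNS test for its crawlers: look up the hostname for the requesting IP, confirm it ends in a Google domain, then resolve that hostname and confirm it maps back to the same IP. The Python sketch below follows that idea. It is not a description of how Bot Clarity does it, the function name is made up, and the lookups are injectable so the example runs without touching the network. The hostname and IP in the demo are dummy values.

```python
import socket

# Domains Google documents for its crawler hostnames.
GOOGLE_DOMAINS = (".googlebot.com", ".google.com", ".googleusercontent.com")

def is_verified_googlebot(ip, reverse_lookup=None, forward_lookup=None):
    """Reverse-then-forward DNS check for a claimed Googlebot IP.

    The default lookups use the socket module (IPv4 only for the forward
    step); pass your own callables to test without network access.
    """
    reverse_lookup = reverse_lookup or (lambda addr: socket.gethostbyaddr(addr)[0])
    forward_lookup = forward_lookup or (lambda host: socket.gethostbyname_ex(host)[2])
    try:
        hostname = reverse_lookup(ip)
        if not hostname.lower().rstrip(".").endswith(GOOGLE_DOMAINS):
            return False
        # The hostname must resolve back to the IP that made the request.
        return ip in forward_lookup(hostname)
    except OSError:
        # Covers failed lookups (socket.herror and socket.gaierror).
        return False

# Demo with stand-in lookups instead of real DNS.
fake_reverse = lambda ip: "crawl-66-249-66-1.googlebot.com"
fake_forward = lambda host: ["66.249.66.1"]
print(is_verified_googlebot("66.249.66.1", fake_reverse, fake_forward))   # True
print(is_verified_googlebot("203.0.113.9", fake_reverse, fake_forward))   # False
```

The second call fails because the hostname resolves to a different IP than the one making the request, which is exactly the mismatch that marks a request as spoofed.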

Response Codes

Also check the HTTP status of your website. Know which URLs are working properly, and which are responding with page errors.

2xx response codes mean the request was properly received and accepted, so those URLs are working as intended.

3xx, 4xx, and 5xx response codes, on the other hand, should be reviewed. For example, while one 301 redirect (indicating the page was moved permanently) isn’t a problem, a chain of multiple redirects will cause trouble.

Since some response codes are positive, you can filter down the results to specify which response codes you want to see. Here, I’ve filtered down the results to show 3xx and 4xx response codes.

(Response Codes for different site URLs.)
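The same kind of filter is easy to reproduce on raw log data. The sketch below assumes you already have `(url, status)` pairs extracted from your logs. It groups codes by their leading digit and pulls out the classes you want to review. The function names and sample hits are illustrative only.

```python
from collections import Counter

def status_class(code):
    """Map a status code such as 404 to its class label, e.g. '4xx'."""
    return f"{code // 100}xx"

def summarize_status(entries, classes=("3xx", "4xx")):
    """Return per-class totals and the (url, status) pairs in the chosen classes."""
    entries = list(entries)  # allow generators, since we iterate twice
    totals = Counter(status_class(status) for _, status in entries)
    flagged = [(url, status) for url, status in entries
               if status_class(status) in classes]
    return totals, flagged

hits = [("/", 200), ("/old", 301), ("/missing", 404), ("/", 200), ("/api", 500)]
totals, flagged = summarize_status(hits)
print(dict(totals))  # {'2xx': 2, '3xx': 1, '4xx': 1, '5xx': 1}
print(flagged)       # [('/old', 301), ('/missing', 404)]
```

Passing `classes=("5xx",)` instead would surface server errors, which are usually the most urgent to fix.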

We also suggest looking into your Googlebot crawl rate over time, and how it correlates with response times and the serving of error pages.

New Content Discovery

With the log file analyzer, you can group new pages on the site through segmentation, and see exactly when these specific pages have been crawled.

In a matter of days, you can confirm that Google has discovered this new strategic content.
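As a rough illustration of the idea, the Python sketch below takes a list of new URLs and finds the earliest Googlebot request for each one. It assumes you already have `(url, timestamp)` pairs for Googlebot hits from your logs. The function name, paths, and dates are hypothetical.

```python
from datetime import datetime

def first_crawl_times(entries, new_urls):
    """Return the earliest crawl time per new URL, or None if never crawled."""
    first_seen = {url: None for url in new_urls}
    for url, timestamp in entries:
        if url not in first_seen:
            continue  # not one of the pages in this segment
        if first_seen[url] is None or timestamp < first_seen[url]:
            first_seen[url] = timestamp
    return first_seen

googlebot_hits = [
    ("/blog/new-guide", datetime(2024, 1, 3, 9, 30)),
    ("/blog/new-guide", datetime(2024, 1, 2, 14, 5)),
    ("/about", datetime(2024, 1, 1, 8, 0)),
]
new_pages = ["/blog/new-guide", "/blog/another-post"]

for url, seen in first_crawl_times(googlebot_hits, new_pages).items():
    print(url, seen.isoformat() if seen else "not crawled yet")
# prints: /blog/new-guide 2024-01-02T14:05:00
# prints: /blog/another-post not crawled yet
```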

User Agent Filter

The User Agent Filter allows you to select or search for specific user agents by name. You can filter them based on criteria such as is, isn't, contains, does not contain, starts with, ends with, or a Regex pattern.

This helps you pinpoint which search bots are most active on your site. By narrowing your analysis to specific user agents, you can also determine if the bots align with the search engines where you aim to improve your rankings.
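To show what those filter criteria mean in practice, here is a small Python sketch that applies each one to a list of user agent strings. It is only an approximation of the behavior described above, not seoClarity's implementation; in particular, treating matches as case-sensitive is an assumption, and the names `OPERATORS` and `filter_user_agents` are made up.

```python
import re

# One predicate per filter criterion; ua is the user agent, v the filter value.
OPERATORS = {
    "is": lambda ua, v: ua == v,
    "isn't": lambda ua, v: ua != v,
    "contains": lambda ua, v: v in ua,
    "does not contain": lambda ua, v: v not in ua,
    "starts with": lambda ua, v: ua.startswith(v),
    "ends with": lambda ua, v: ua.endswith(v),
    "regex": lambda ua, v: re.search(v, ua) is not None,
}

def filter_user_agents(user_agents, operator, value):
    """Keep the user agents that satisfy the chosen criterion."""
    test = OPERATORS[operator]
    return [ua for ua in user_agents if test(ua, value)]

agents = [
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
    "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)",
    "curl/8.0.1",
]
print(len(filter_user_agents(agents, "contains", "Googlebot")))           # 1
print(len(filter_user_agents(agents, "regex", r"(?i)googlebot|bingbot")))  # 2
print(filter_user_agents(agents, "starts with", "curl"))                   # ['curl/8.0.1']
```

The regex option is the most flexible: one pattern can cover several bots at once, as in the second call.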

Top Crawled Pages

Log file analysis reveals which pages are most frequently crawled by bots, allowing you to ensure that these align with your site’s most important pages.

It’s crucial that your crawl budget isn't wasted on lower-impact pages.

By verifying that Google is focusing on your high-priority pages—the ones with the most products and highest potential for sales—you can optimize your site’s visibility and effectiveness.
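A quick way to sanity-check this on your own data is to rank paths by crawl count and compare the ranking against a list of pages you consider high priority. The Python sketch below assumes you have the requested paths from Googlebot's hits and a hand-picked priority list. Both function names and all sample paths are hypothetical.

```python
from collections import Counter

def top_crawled(paths, n=10):
    """Return the n most frequently requested paths with their hit counts."""
    return Counter(paths).most_common(n)

def uncrawled_priorities(paths, priority_pages):
    """List priority pages that never appear among the crawled paths."""
    crawled = set(paths)
    return [page for page in priority_pages if page not in crawled]

googlebot_paths = [
    "/products/a", "/tag/x", "/tag/x", "/tag/x", "/products/a", "/about",
]
print(top_crawled(googlebot_paths, n=2))
# prints: [('/tag/x', 3), ('/products/a', 2)]
print(uncrawled_priorities(googlebot_paths, ["/products/a", "/products/b"]))
# prints: ['/products/b']
```

In this made-up data, a tag page attracts more crawls than any product page, and one priority page is not crawled at all, which are both signs that crawl budget may be going to the wrong places.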

Googlebot IP

Lastly, discover which IP addresses Googlebot is using to crawl your site. Verify that Googlebot is correctly accessing the relevant pages and resources in each case.

Conclusion

Bot log files can take a little work in terms of gathering the data from the correct teams, but once you pipe them into seoClarity and can compare them to your other SEO metrics, you are one step closer to understanding Google and how it understands your site.

To learn more about how Bot Clarity can help you conduct advanced server log-file analysis and understand bot behavior on a whole new level, schedule a demo today!

<<Editor's Note: This post was originally published in January 2020 and has since been updated.>>
